Semantic Search with Qdrant, Hugging Face, SentenceTransformers and transformers.js

Build a fully working semantic search stack from just two pieces: Qdrant, a vector database with a built-in API, and transformers.js, which runs a Hugging Face model in the browser as your frontend-only embedding generator. No additional inference server needed!

Image courtesy Qdrant & Hugging Face.

Intro

There are many great pieces of AI software out there and, as crazy as it sounds, we can now even run ML models entirely in the browser! This means that we can compute sentence embeddings in the client and avoid the inference service in the backend. By using Qdrant, we can reduce the application complexity by another level and even save ourselves the application server.

All we need for a fully working semantic search engine is a dockerized Qdrant image in the backend and some lean JS code for the client!

Impatient? Jump to the implementation.

Components

Classic Setup

The traditional architecture of a machine learning application for semantic search might look something like this.

Assuming that the vector database is already populated with documents and their respective embeddings, a typical query flow from a client would look like this.

A client wants to get the most relevant documents for the search term “Earth observation applications for agriculture”. The term is sent to the API-Server.

The API-Server receives the term.

- If the client would simply like to perform a full-text query, the database could be queried directly with the submitted string and the API-Server would send the results back.

- The more complex case, where a semantic search must be performed, requires more overhead: the term is first sent to the inference service.

The inference service consists of two parts:

The tokenization pipeline applies certain tokenization rules to the query term. The easiest one would be to split the term by spaces:

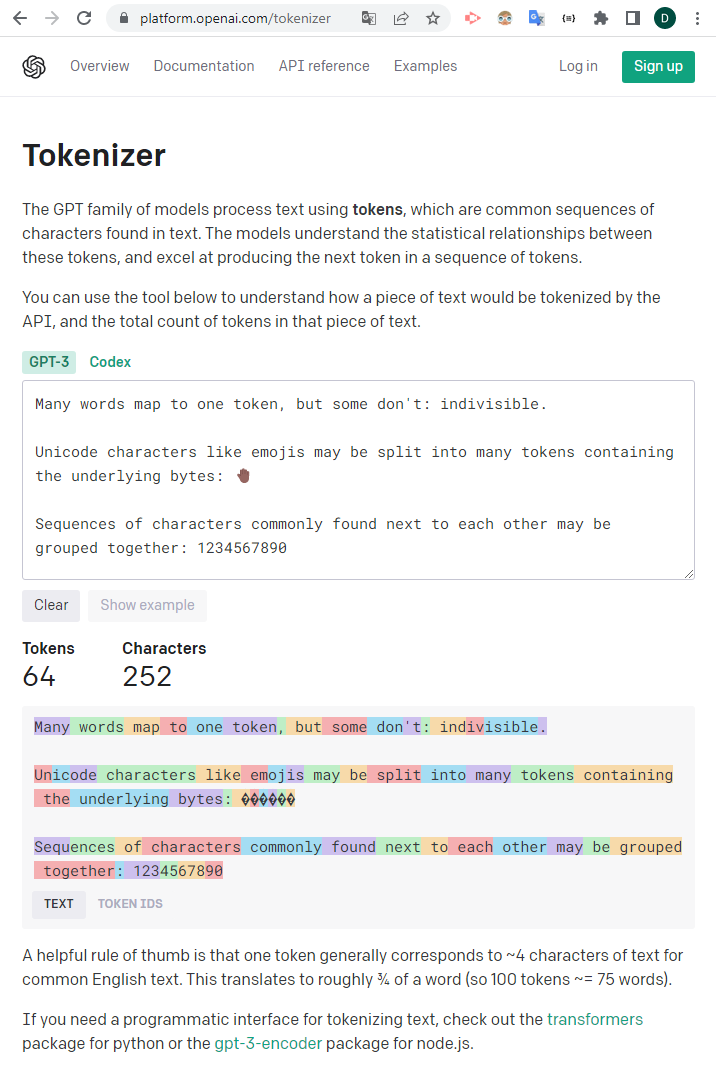

["Earth", "observation", "applications", "for", "agriculture"]. Note that there are many more rules for tokenization and that models on Hugging Face usually come with a dedicated file describing them. See for example the tokenizer.json, with its long set of rules and a custom dictionary that defines which word is converted to which number. To see e.g. the GPT-3 tokenizer in action, OpenAI offers a visually appealing online service that shows the exact number of tokens for your prompt. Give it a try!

The actual model. The tokenized input is sent to the model, which performs the heavy work of calculating the embedding. Small models such as sentence-transformers/all-MiniLM-L6-v are usually very efficient (also due to Rust implementation) and do not require enormous resources. Large language models (LLMs) such as the GPT family, by contrast, do.
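The naive whitespace splitting and the word-to-number dictionary described above can be sketched in a few lines. This is a toy illustration only: the vocabulary `TOY_VOCAB` and both function names are made up for this example, and real tokenizers typically split text into subwords according to the rules in the model's tokenizer.json.

```javascript
// Toy vocabulary (hypothetical); a real one ships with the model.
const TOY_VOCAB = { '[UNK]': 0, earth: 1, observation: 2, applications: 3, for: 4, agriculture: 5 };

function whitespaceTokenize(text) {
  // Split on runs of whitespace and drop empty strings.
  return text.trim().split(/\s+/).filter((token) => token.length > 0);
}

function tokensToIds(tokens, vocab = TOY_VOCAB) {
  // Look up the lowercased token; unknown words map to the [UNK] id.
  return tokens.map((token) => {
    const key = token.toLowerCase();
    return Object.prototype.hasOwnProperty.call(vocab, key) ? vocab[key] : vocab['[UNK]'];
  });
}

const toyTokens = whitespaceTokenize('Earth observation applications for agriculture');
const toyIds = tokensToIds(toyTokens);
console.log(toyTokens); // ['Earth', 'observation', 'applications', 'for', 'agriculture']
console.log(toyIds);    // [1, 2, 3, 4, 5]
```

The model never sees the words themselves, only the list of numbers.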

The embedding (a vector, e.g. [0.2345245234, -0.91243234, 0.23424355, ...]) is used to query the vector database, which retrieves the documents (e.g. by calculating the distance between the query vector and all document vectors with dot product or cosine similarity). Finally, the API-Server sends the documents to the client.
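To make the distance calculation concrete, here is a minimal brute-force sketch that ranks a handful of toy document vectors by cosine similarity to a query vector. The function names and the three-dimensional vectors are invented for illustration; a real vector database like Qdrant relies on an index rather than comparing the query against every single document.

```javascript
function dotProduct(a, b) {
  if (a.length !== b.length) throw new Error('Vectors must have the same length');
  let sum = 0;
  for (let i = 0; i < a.length; i++) sum += a[i] * b[i];
  return sum;
}

function cosineSimilarity(a, b) {
  // Dot product divided by the product of both vector lengths.
  const denom = Math.sqrt(dotProduct(a, a)) * Math.sqrt(dotProduct(b, b));
  return denom === 0 ? 0 : dotProduct(a, b) / denom;
}

function rankBySimilarity(queryVector, documents, limit = 3) {
  // Score every document, sort descending, keep the best `limit` hits.
  return documents
    .map((doc) => ({ id: doc.id, score: cosineSimilarity(queryVector, doc.vector) }))
    .sort((x, y) => y.score - x.score)
    .slice(0, limit);
}

// Toy data (hypothetical): real embeddings have hundreds of dimensions.
const toyDocs = [
  { id: 'a', vector: [1, 0, 0] },
  { id: 'b', vector: [0.6, 0.8, 0] },
  { id: 'c', vector: [0, 0, 1] },
];
console.log(rankBySimilarity([1, 0, 0], toyDocs, 2)); // 'a' first, then 'b'
```

If the vectors are already normalized to length 1, the dot product alone gives the same ranking as cosine similarity.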

Note that for a normal full-stack application you usually need at least two running services: a database and the application server. With machine learning models you need two additional services (depending on the application), one for tokenization and one for the actual model, which causes plenty of overhead.

Shifting workload to the client

What if we radically simplified the architecture and shifted some of the workload to the client?

With this setup, there are two major changes in comparison to the traditional setup:

We shifted the heavy workload to the client by using transformers.js. The library loads (and caches) the model from Hugging Face and executes it through WebAssembly right in the browser.

By using Qdrant, the backend API and the vector database become one! Qdrant has a built-in API for querying, so in theory there is no need for anything in between.
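Querying Qdrant straight from the client could look roughly like the sketch below. It assumes the REST search endpoint under /collections/{name}/points/search and a local instance on localhost:6333 with a collection called test_collection; the function names and the default limit are made up for this example, so check the API reference for your Qdrant version before relying on it.

```javascript
function buildSearchRequest(vector, { baseUrl = 'http://localhost:6333', collection = 'test_collection', limit = 5 } = {}) {
  // Assemble URL and fetch options separately so they are easy to inspect.
  return {
    url: `${baseUrl}/collections/${encodeURIComponent(collection)}/points/search`,
    options: {
      method: 'POST',
      headers: { 'Content-Type': 'application/json' },
      // with_payload asks Qdrant to return the stored document fields too.
      body: JSON.stringify({ vector, limit, with_payload: true }),
    },
  };
}

async function searchQdrant(vector, config) {
  const { url, options } = buildSearchRequest(vector, config);
  const response = await fetch(url, options);
  if (!response.ok) throw new Error(`Qdrant responded with HTTP ${response.status}`);
  const body = await response.json();
  return body.result; // expected: array of hits with id, score and payload
}

// Usage (needs a running Qdrant instance):
// const hits = await searchQdrant(queryVector, { limit: 10 });
```

Keeping the request builder separate from the network call makes it possible to check the payload without a running database.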

I tested this setup on my old laptop with surprisingly good results. The initial load of the model might take a few seconds, depending on your connection speed (and the Hugging Face response time). Actual usage, in contrast, is blazingly fast, and since the model is cached in the client’s browser, successive calls no longer need to download it!
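Loading the model once and reusing it for every query could be sketched as follows. Treat the details as assumptions: the package name @xenova/transformers, the model id Xenova/all-MiniLM-L6-v2 and both helper names are illustrative choices, not necessarily what the demo uses, and the bare import specifier presumes a bundler or import map (otherwise point it at a CDN URL).

```javascript
// One pipeline promise per model id, so the model is only initialized once.
const extractorCache = new Map();

function loadExtractor(modelId = 'Xenova/all-MiniLM-L6-v2') {
  if (!extractorCache.has(modelId)) {
    // Dynamic import keeps this file loadable even before the library is fetched.
    const promise = import('@xenova/transformers')
      .then(({ pipeline }) => pipeline('feature-extraction', modelId));
    extractorCache.set(modelId, promise);
  }
  return extractorCache.get(modelId);
}

async function embedText(text, extractor) {
  // Mean-pool the token embeddings and normalize the result to unit length.
  const output = await extractor(text, { pooling: 'mean', normalize: true });
  // output.data is a typed array; convert it to a plain array for JSON.
  return Array.from(output.data);
}

// Usage in the browser (downloads the model on the first call):
// const extractor = await loadExtractor();
// const vector = await embedText('Earth observation applications for agriculture', extractor);
```

The resulting plain array can be sent to the vector database as the query vector.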

TensorFlow vs. PyTorch

TensorFlow offers TensorFlow.js, which works great out of the box! As of today, PyTorch unfortunately has no JS twin, which is why we need transformers.js.

Implementation

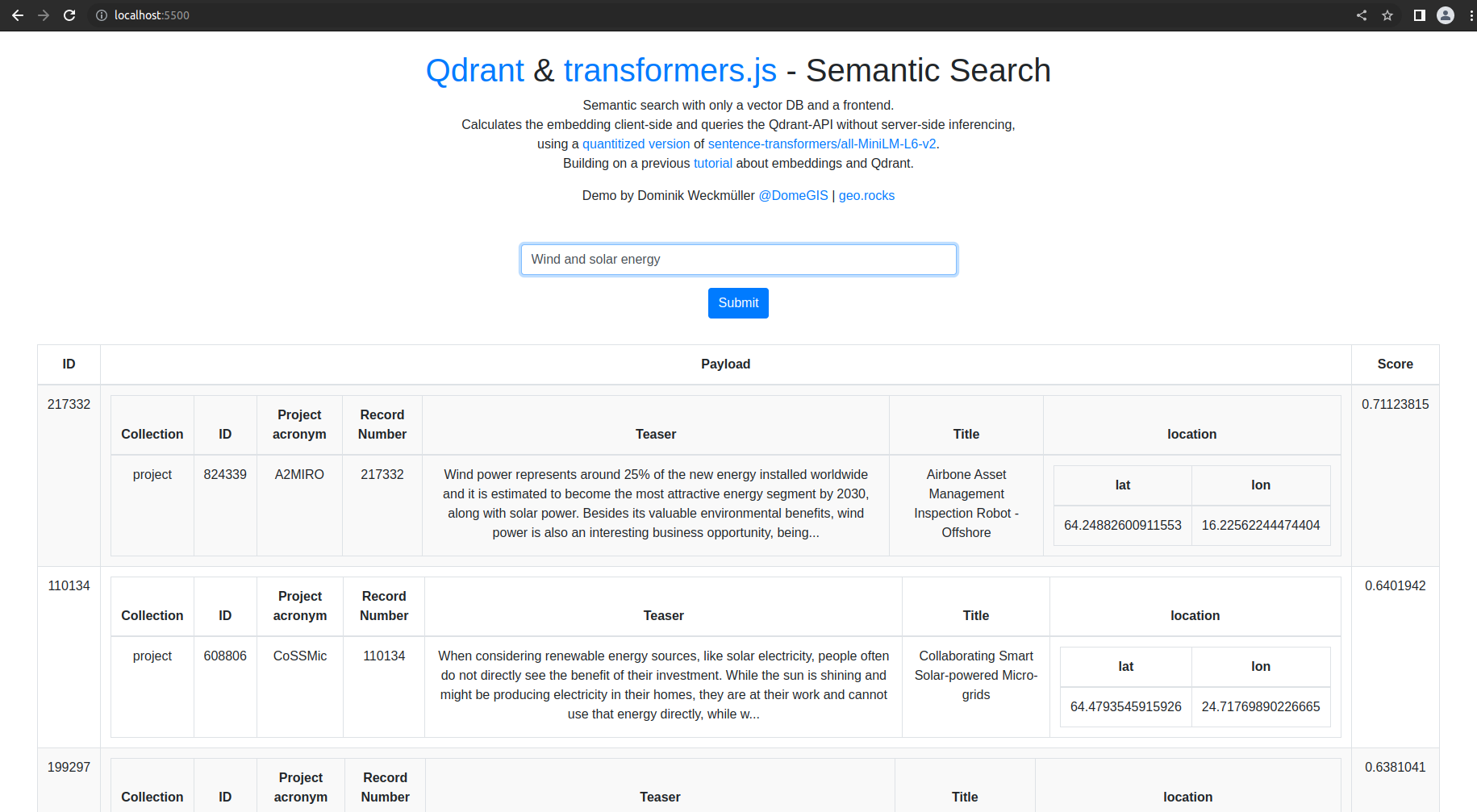

Demo here! (needs a locally running Qdrant instance, otherwise it just logs the calculated embedding to the console)

It’s basically just plugging everything together: a simple Bootstrap-based frontend that loads the model first, then activates the submit button and queries the Qdrant instance. The response elements are parsed and appended as table rows.
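Turning the response elements into table rows might look like the sketch below. It is not the demo's actual code: the payload field name title, the element id in the usage comment and both function names are hypothetical, and the hit shape (id, score, payload) is assumed from a typical Qdrant search result.

```javascript
function escapeHtml(value) {
  // Escape characters that would otherwise be interpreted as markup.
  return String(value)
    .replace(/&/g, '&amp;')
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;')
    .replace(/"/g, '&quot;')
    .replace(/'/g, '&#39;');
}

function hitsToTableRows(hits, titleField = 'title') {
  // One <tr> per hit: id, a payload field, and the similarity score.
  return hits
    .map((hit) => {
      const payload = hit.payload || {};
      const title = payload[titleField] !== undefined ? payload[titleField] : '(no title)';
      return `<tr><td>${escapeHtml(hit.id)}</td><td>${escapeHtml(title)}</td><td>${hit.score.toFixed(3)}</td></tr>`;
    })
    .join('\n');
}

// In the browser, the rows could be appended like this (element id is hypothetical):
// document.querySelector('#results tbody').insertAdjacentHTML('beforeend', hitsToTableRows(hits));
```

Escaping the payload matters here because document titles come from the database and end up in the page as HTML.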

Test it yourself

If you would like to test it yourself, you’ll need a Qdrant instance with some dummy data. Head over to my previous tutorial to set up an instance with some test data from CORDIS in no time! I designed the template to serve my use case but feel free to remove the whole table logic.

When your Qdrant instance is running locally on localhost:6333 and you have populated a test_collection, simply download the HTML document (all JS and CSS are inline, it’s just one file) and open it directly in any browser (not even a server required!), or use the public instance directly with the hard-coded parameters mentioned above.

Use cases

Many web apps, desktop programs and mobile apps are built on some kind of modified Chromium or Gecko engine, so chances are that the workflow presented here might come in handy. With transformers.js you can easily bring your own PyTorch model and either save it directly on your client’s disk (when installing) or cache it in the browser. Just think about the possible deployment speed when a new model is released, and the hacky potential!

Just keep in mind that the setup described here might not be suitable if you have very large models or would like to offer a smoother user experience.

Disclaimer

- I am not affiliated with any of the companies or projects mentioned here but simply enjoy their great work for the AI community. Thanks!