Scripts and tricks to preprocess Instagram data mined with Fast-Instagram-Scraper. Get it on a map!

Scripts and tricks to preprocess Instagram data mined with Fast-Instagram-Scraper. Get it on a map!

Download some sample data

Skip this section if you downloaded some csv files already

You can explore Instagram locations under https://www.instagram.com/explore/locations. There you’ll find most countries subdivided into cities or particular spots subdivided again into actual locations with location IDs.

Let’s take the example of Hamburg (Germany). The link for the first location popping up under https://www.instagram.com/explore/locations/c560419/hamburg-germany/ is https://www.instagram.com/explore/locations/213110159/hamburg-germany/.

Taking a closer look at the logic you might spot some incoherences like doublettes, entirely wrong locations not even located in Hamburg or locations without coordinates. Yep it’s that messy. For quickly mining location IDs see a former blog post.

If you click on the latter you’ll see the most popular and after most recent posts.

By adding ?__a=1 to the URL a plain JSON will be returned that can just be downloaded. It looks like this:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

| {

"graphql": {

"location": {

"id": "213110159",

"name": "Hamburg, Germany",

"has_public_page": true,

"lat": 53.55,

"lng": 10.0014,

"slug": "hamburg-germany",

"blurb": "",

"website": "https://www.hamburg.de/",

"phone": "",

"primary_alias_on_fb": "",

"address_json": "{\"street_address\": \"\", \"zip_code\": \"\", \"city_name\": \"Hamburg, Germany\", \"region_name\": \"\", \"country_code\": \"DE\", \"exact_city_match\": true, \"exact_region_match\": false, \"exact_country_match\": false}",

"profile_pic_url": "https://scontent-frx5-1.cdninstagram.com/v/t51.2885-15/e35/s150x150/128532887_684253752482790_5537570428220005328_n.jpg?_nc_ht=scontent-frx5-1.cdninstagram.com&_nc_cat=1&_nc_ohc=LRPnfQ6OO78AX9HE08v&tp=1&oh=720693179332ddfa0a9e3ff68634d06e&oe=5FEEB09B",

"edge_location_to_media": {

"count": 8261174,

"page_info": {

"has_next_page": true,

"end_cursor": "2454738483475066914"

},

"edges": [

{

"node": {

"comments_disabled": false,

"id": "2452345676542405",

"edge_media_to_caption": {

"edges": [

{

"node": {

"text": "some sample text for the first post displayed

...

|

This is how Fast-Instagram-Scraper works. It just downloads these JSON-Files one after another with a chunk size of approximately 50 posts each.

Assuming you downloaded Fast-Instagram-Scraper with

1

| git clone https://github.com/do-me/fast-instagram-scraper.git

|

or just downloaded directly via https://github.com/do-me/fast-instagram-scraper/archive/main.zip 🤷🏻♂️ and installed all its depencies via pip you can run a simple test scrape with the location ID 213110159 for Hamburg from above and one more for its city center 891901184:

1

2

| python fast-instagram-scraper.py 213110159 location --max_posts 100;

python fast-instagram-scraper.py 891901184 location --max_posts 100;

|

These few posts will be scraped in an instant and we are good to go! 🏃

Concat csv-files

Got a folder full of csv-files freshly mined like from the commands above? For preprocessing it’s easier to just deal with one file. Let’s concat it with Powershell (Ubuntu works similar) by creating a folder “merged” first and after a concatenation of all .csv files with:

1

| Get-ChildItem -Filter *.csv | Select-Object -ExpandProperty FullName | Import-Csv | Export-Csv .\merged\merged.csv -NoTypeInformation -Append

|

Of course if your files are too big it can take a while but let’s say up to a few gb there shouldn’t be any issue neither with Powershell nor with Python in the following steps.

Prepare the master table

Let’s get to some functions in the following snippets. The entire notebook can be found on my github repo.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

| # import packages

import geopandas as gpd

import shapely.wkt

import pandas as pd

from collections.abc import Iterable

from ast import literal_eval

import os

os.environ['PROJ_LIB'] = 'C:/Users/username/Anaconda3/Library/share/proj' # change this file path if needed

# load merged csv-file in pandas dataframe

df = pd.read_csv("merged.csv",encoding="utf-8")

# define some functions

# cleaner, removes unnecessary upper nodes

posts_without_text = 0 # just in case you can check later

def str_to_obj(x):

global posts_without_text

try:

return literal_eval(x)[0]["node"]["text"]

except:

posts_without_text += 1

return ""

# simple yet effective list flattener

def flatten(l): #[["k","k"],"k"] -> ['k', 'k', 'k']

for el in l:

if isinstance(el, Iterable) and not isinstance(el, (str, bytes)):

yield from flatten(el)

else:

yield el

# some stop characters but could also be entire stopwords such as "and" or "the" - should be done later though

stop_characters = [""," "]

# extracts hashtags from a string (see cell below for example)

def extract_hashtags(x):

hashtags_space_separated = [i for i in x.split() if i.startswith("#")] # normal hashtags #life #love -> #life, #love

hashtags_without_space = list(flatten([i.split("#") if i.count("#") > 1 else i for i in hashtags_space_separated])) # without space #life#love -> [#life, #love]

remove_hashtags = [i.replace("#","") for i in hashtags_without_space] # remove hashtags

remove_stop_characters = [i for i in remove_hashtags if i not in stop_characters] # remove empty items and space

return remove_stop_characters

|

This function extracts all classic hashtags (#normal #hashtag #logic), or hashtags written together (#all#togehter#here) but ignores other forms like hyperlinks (https://sample.com/#nohashtag). This will return most hashtags with the exception of free text and hashtags immediately after (awesome#crocodile). In case you need those, just change ‘i.startswith("#")’ to ‘i.count("#") > 0’ which will return all strings containing a hashtag instead of only strings beginning with a hashtag.

1

2

3

4

| extract_hashtags("people look at this #amazing #super#fantastic #greenpark https://sample.com/#nohashtag this#hashtagwillbelost")

# Result

['amazing', 'super', 'fantastic', 'greenpark']

|

Let’s apply the functions and go on with some trivial preparation for geopandas later:

1

2

3

4

| df["edge_media_to_caption.edges"] = df["edge_media_to_caption.edges"].apply(lambda x: str_to_obj(x)) # "denode" text

df["hashtags"] = df["edge_media_to_caption.edges"].apply(lambda x: list(set(extract_hashtags(x)))) # extract unique hashtags by using set

df["location_latlong_str"] = df["location_latlong"] # keep a column copy for later

df # print df

|

Now we have a nice dataframe with hashtags and can get some first insight. For example let’s have a look how many posts didn’t include any caption or hashtags by just typing posts_without_text/len(df). In my case it was around 8.5% which might be average.

Convert pandas dataframe to geopandas geodataframe in web mercator projection EPSG:3857

Converting a pandas dataframe to a geopandas geodataframe works quite straightforward. You just need to tell geopandas where to find latitude and longitude columns and it’s done.

1

2

3

4

5

6

7

8

9

| # create geodataframe from pandas dataframe

# prepare some columns

df["location_latlong"] = df["location_latlong"].apply(lambda x: literal_eval(x)) # convert to object

df["lat"] = df["location_latlong"].apply(lambda x: x[0]) # new col lat

df["long"] = df["location_latlong"].apply(lambda x: x[1]) # new col long

# pandas df to geopanas gdf

gdf = gpd.GeoDataFrame(df, geometry=gpd.points_from_xy(y = df.lat, x = df.long))

|

Swapping latitude and logitude

As a geographer there is simply no way of getting around x/y lat/long swapping slips at some point.

It will happen - just be warned 🐸

Let’s reproject to web mercator.

1

2

3

4

5

| gdf.crs = ("EPSG:4326") # set EPSG information...

gdf = gdf.to_crs("EPSG:3857") # ...and reproject to web mercator

# et voilà: a nice, reprojected geodataframe

gdf

|

The geodataframe is ready! Only for saving, some particularities apply as we cannot save objects to GeoJSON but must convert everything to plain numbers or strings.

1

2

3

4

5

6

7

8

9

10

| # GeoJSON format cannot store object data types, so all columns must be plain numbers or strings

# drop unnecessary column which cannot be saved due to list type

gdf = gdf.drop(columns=['location_latlong'])

# list to plain string

gdf["hashtags"] = gdf["hashtags"].apply(', '.join)

# save as GeoJSON but note that you have a GeoJSON with plenty of posts for the same points which might not be what you want

gdf.to_file('all_posts_original.geojson', driver='GeoJSON', encoding="utf-8")

|

Be warned that you just exported a large file and that you have the same coordinates multiple times. Technically it is just your input merged.csv enriched with hashtags and converted to a GeoJSON which can be fed into QGIS for example. But do not do this, depending on your file size it will take to long and is unnecessary (better avoid creating files larger than 200 mb).

Export unique coordinates with location name, ID and slug as GeoJSON

To get a first overview over your locations it is way better to just export the coordinates without the additonal post information. Pandas groupby statement does the trick.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

| # just unique locations coordinate, slug etc. but no post info

# groupby does the trick here

uf = df.groupby(['location_latlong_str','location_id','location_name','location_slug'], as_index=False).count()

# rest as above

uf = uf[["location_latlong_str","location_id","location_name","location_slug"]]

uf["location_latlong_str"] = uf["location_latlong_str"].apply(lambda x: literal_eval(x)) # convert to object

uf["lat"] = uf["location_latlong_str"].apply(lambda x: x[0])

uf["long"] = uf["location_latlong_str"].apply(lambda x: x[1])

ugdf = gpd.GeoDataFrame(uf, geometry=gpd.points_from_xy(y = uf.lat, x = uf.long))

ugdf = ugdf.drop(columns=['location_latlong_str'])

ugdf.crs = ("EPSG:4326")

ugdf = ugdf.to_crs("EPSG:3857")

ugdf.to_file('unique_points_no_post_information.geojson', driver='GeoJSON', encoding="utf-8")

# for saving as geopackage

# ugdf=ugdf.loc[ugdf.lat.notnull()] # if needed remove null values

# ugdf["hashtags"] = ugdf["hashtags"].apply(', '.join) # can't save object, must be plain string

# without_nan_ugdf.to_file("unique_points.gpkg", layer='unique_points', driver="GPKG") # throws error on Widnows but works nonetheless

|

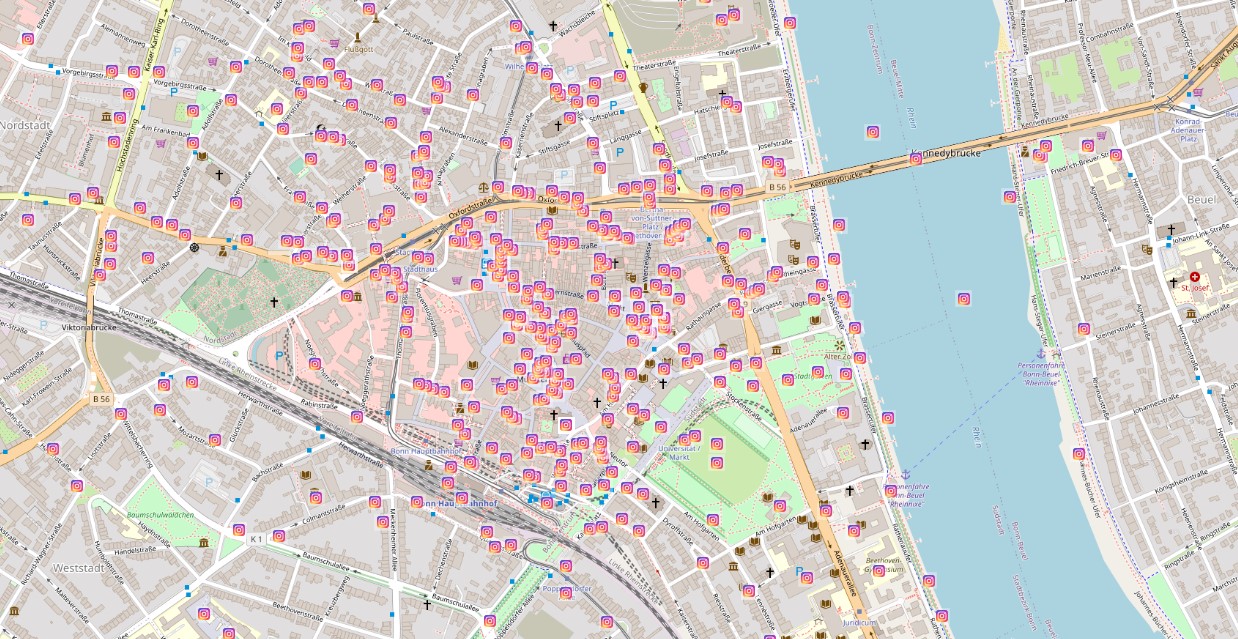

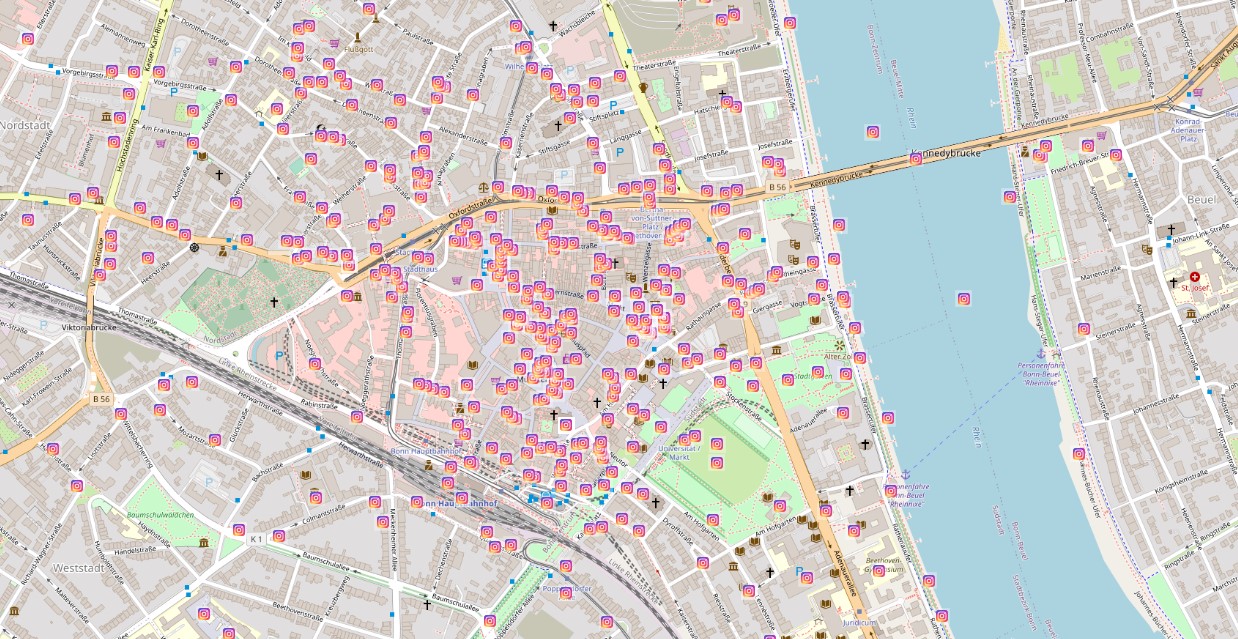

Just drag’n’drop this file in QGIS and you’ll get a basic visualization of where your location IDs are located! If you want to get a basemap and change the point markers to Instagram logo see my next post.

Export unique points with post information

If for some reason you still need the post information for every coordinate, you could go the following (interesting) way.

1

2

3

4

5

6

| # get unique coords and their posts, useful for filtering

un = pd.DataFrame(df.groupby('location_latlong_str'))#.filter(lambda group: len(group) == 1)

un[0] = un[0].apply(lambda x: literal_eval(x)) # convert to object

un["lat"] = un[0].apply(lambda x: x[0])

un["long"] = un[0].apply(lambda x: x[1])

un

|

Here we have created a pandas dataframe with groupby like above. But now we have additional sub-dataframes per location!

Thanks to the great pandas usability we could access the posts for the first location with un[0][0]. Note that the first zero is the not yet renamed column for posts and that the second zero is for the first entry corresponding with the post information dataframe for this particular location. This comes in handy for filters!

One more time, create a geodataframe, reproject and export. This time it’s a big file again.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

| # dataframes in dataframe column 1

un[1] = un[1].apply(lambda x: x.to_json()) # need to transform pandas dataframes to json for export

postgdf = gpd.GeoDataFrame(un, geometry=gpd.points_from_xy(y = un.lat, x = un.long))

postgdf = postgdf.drop(columns=[0])

postgdf.crs = ("EPSG:4326")

postgdf = postgdf.to_crs("EPSG:3857")

# rename columns

postgdf.columns = ["posts","lat","long", "geometry"]

postgdf.to_file('unique_points_with_post_information.geojson', driver='GeoJSON', encoding="utf-8")

postgdf

# if AttributeError: 'int' object has no attribute 'encode'

# column names cant be int must be str!

|

And there we are. With these preprocessed files we can go to the exciting analysis part such as sentiment analysis or topic modeling. Stay tuned for more posts! ☃️

Links

Repository: https://github.com/do-me/fast-instagram-scraper

Jupyter notebook with all scripts from above: https://github.com/do-me/fast-instagram-scraper/blob/main/A%20complete%20guide%20to%20preprocess%20Instagram%20post%20data%20mined%20with%20Fast%20Instagram%20Scraper.ipynb

Scripts and tricks to preprocess Instagram data mined with Fast-Instagram-Scraper. Get it on a map!

Scripts and tricks to preprocess Instagram data mined with Fast-Instagram-Scraper. Get it on a map!