Writing Your Own Scraper - Simple Instagram Scraper

How to write your DIY Instagram Scraper

As always, frustration serves as the initial motivation. Back in the good old mid 2020s I was quite happy with Instagram-Scraper, but since the latest Instagram policy changes I have been confronted with loads of 429 errors. Technically, they indicate that you made too many requests and therefore got blocked, sometimes entirely.

The art of not getting blocked

Instagram uses the now-standard infinite scroll on both desktop and mobile to display more posts, which makes scraping much harder than with classic pagination, where you just iterate through the pages. The problem is that no matter what you do, you will get blocked at some point if you scroll or click through posts for more than a couple of hours. So the challenge is to mine as fast as possible and as slow as necessary before getting blocked.

🏁 Where to start 🏁

In the beginning, you should look for some kind of easy logic to get what you want. In the desktop browser there are two ways to achieve this; on mobile there is just one.

💻 + 📱

- Go to the hashtag/geolocation/user page and scroll down until you get blocked

- Scrape all a tags with the respective post id

- (Slowly) iterate through all the post ids and scrape all information

The scrolling step is the limiting factor, as it determines the number of post ids we can get. Afterwards we can take more time and relax while iterating through the post ids. In my experience it will be hard to get more than 8000 posts; I always ended up between 5000 and 8000. To speed up the scrolling, make sure to disable caching, either with Selenium or directly in the browser by pressing F12, going to the Network tab, ticking “Disable cache” and, most importantly, leaving the console open!
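To illustrate the "scrape all a tags" step, here is a minimal sketch using only the standard library. It assumes that post links have the form `/p/<post id>/` in the `href` attribute; the names `PostLinkParser` and `extract_post_ids` are made up for this example and are not taken from any existing scraper.

```python
import re
from html.parser import HTMLParser

# Assumption: post links look like /p/<post id>/ inside the href of <a> tags.
POST_HREF = re.compile(r"^/p/([A-Za-z0-9_-]+)/?")


class PostLinkParser(HTMLParser):
    """Collects unique post ids from the <a> tags of a page snapshot."""

    def __init__(self):
        super().__init__()
        self.post_ids = []  # keeps first-seen order
        self._seen = set()

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href") or ""
        match = POST_HREF.match(href)
        if match and match.group(1) not in self._seen:
            self._seen.add(match.group(1))
            self.post_ids.append(match.group(1))


def extract_post_ids(html):
    """Return the post ids found in an HTML string, without duplicates."""
    parser = PostLinkParser()
    parser.feed(html)
    return parser.post_ids
```

With Selenium you could feed `driver.page_source` into `extract_post_ids` after every scroll and merge the results, in case earlier posts drop out of the DOM while you keep scrolling.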

💻

- Go to the hashtag/geolocation/user page and click the first post (recent, not top posts!)

- Scrape all information

- Click the next-post arrow and repeat from the scraping step

The advantage here is that you scrape all data in real time: if it fails at some point, the data collected so far is already in your database and you can simply continue.
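A rough sketch of that "save as you go" idea, assuming SQLite as the database. The table layout and the helper names `open_db` and `save_post` are hypothetical and only meant to show the pattern: every post is committed right after it is scraped, and a post id that is already stored is skipped, so a restarted run can simply continue.

```python
import sqlite3


def open_db(path=":memory:"):
    """Open (or create) the database; pass a file path to persist between runs."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS posts ("
        "post_id TEXT PRIMARY KEY, "
        "caption TEXT, "
        "scraped_at TEXT DEFAULT CURRENT_TIMESTAMP)"
    )
    return conn


def save_post(conn, post_id, caption):
    """Store one post immediately; returns False if it was already saved."""
    cur = conn.execute(
        "INSERT OR IGNORE INTO posts (post_id, caption) VALUES (?, ?)",
        (post_id, caption),
    )
    conn.commit()  # commit per post so a crash loses at most the current one
    return cur.rowcount == 1
```

Committing after every single post is slower than batching, but at human-like scraping speeds the database is never the bottleneck.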

Challenges ⛰️

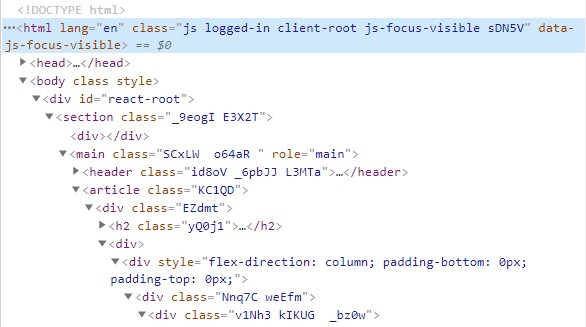

There are plenty of challenges involved here, as Instagram obviously doesn’t want people to scrape their data. Starting with the DOM: you can’t access any element by ID, only by randomized classes which are altered every now and then.

So there is no easy way out other than updating the class names in your scraper from time to time.
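One way to make those updates less painful is to keep all class selectors in a single place and try them in order. The following is only a sketch: the class names are invented placeholders, and `first_match` expects a hypothetical `query` callable that returns an element for a CSS selector or `None` if nothing matches.

```python
# Hypothetical class names; replace them with whatever the live DOM currently uses.
SELECTORS = {
    "caption": ["span.cap-new", "span.cap-old"],
    "likes": ["div.likes-new"],
}


def first_match(field, query, selectors=SELECTORS):
    """Try each known selector for a field and return the first hit, else None."""
    for css in selectors[field]:
        result = query(css)
        if result is not None:
            return result
    return None
```

When Instagram changes a class, you add the new selector to the front of the list and leave the rest of the scraper untouched. With Selenium, `query` could wrap `driver.find_elements(By.CSS_SELECTOR, css)` and return the first element, if any.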

The next one is harder to overcome as it involves a lot of trial and error. As mentioned earlier we need to imitate a human which means:

- Be slow

- Be random (from an algorithm’s perspective)

- Pause now and then

Experience shows that the breaks are crucial.
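The three rules above can be sketched as a small delay helper. All numbers (2 to 6 seconds between actions, a 5% chance of a 1 to 5 minute break) are assumptions for illustration, not tested values; finding ones that work is exactly the trial-and-error part. The function names are hypothetical as well.

```python
import random
import time


def next_delay(rng, base=(2.0, 6.0), break_chance=0.05, long_break=(60.0, 300.0)):
    """Return a wait time in seconds: usually short, occasionally a long break."""
    if rng.random() < break_chance:
        return rng.uniform(*long_break)  # pause now and then
    return rng.uniform(*base)  # be slow and be random


def human_pause(rng=random, sleep=time.sleep, **kwargs):
    """Sleep for a randomized delay and return how long the pause was."""
    delay = next_delay(rng, **kwargs)
    sleep(delay)
    return delay
```

Calling `human_pause()` between two scroll or click actions is enough to use it. The `sleep` parameter only exists so the helper can be tried out without actually waiting.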

Perks

With Simple Instagram Scraper on GitHub I wrote a highly customizable scraper that you can easily alter, particularly the break times and intervals. It also scrapes each picture’s accessibility caption, which is generated by Facebook’s image recognition and hidden in the image’s alt attribute or the respective JSON, so you can avoid violating data privacy by not downloading any media. These captions serve well for topic modeling, as we did for Instagreens-Bonn.
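For the alt-attribute route, a minimal standard-library sketch could look like the one below. It is not the code from Simple Instagram Scraper; `AltTextParser` and `extract_alt_texts` are names made up for this example, and it simply collects every non-empty `alt` value of the `img` tags in a piece of HTML.

```python
from html.parser import HTMLParser


class AltTextParser(HTMLParser):
    """Collects the non-empty alt texts of all <img> tags."""

    def __init__(self):
        super().__init__()
        self.alt_texts = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        alt = (dict(attrs).get("alt") or "").strip()
        if alt:
            self.alt_texts.append(alt)


def extract_alt_texts(html):
    """Return the accessibility captions found in an HTML string."""
    parser = AltTextParser()
    parser.feed(html)
    return parser.alt_texts
```

Only text is stored this way, so no image ever has to be downloaded.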

More posts will follow soon. Stay tuned!

If you have any questions or comments, feel free to reach out to me! 🐘